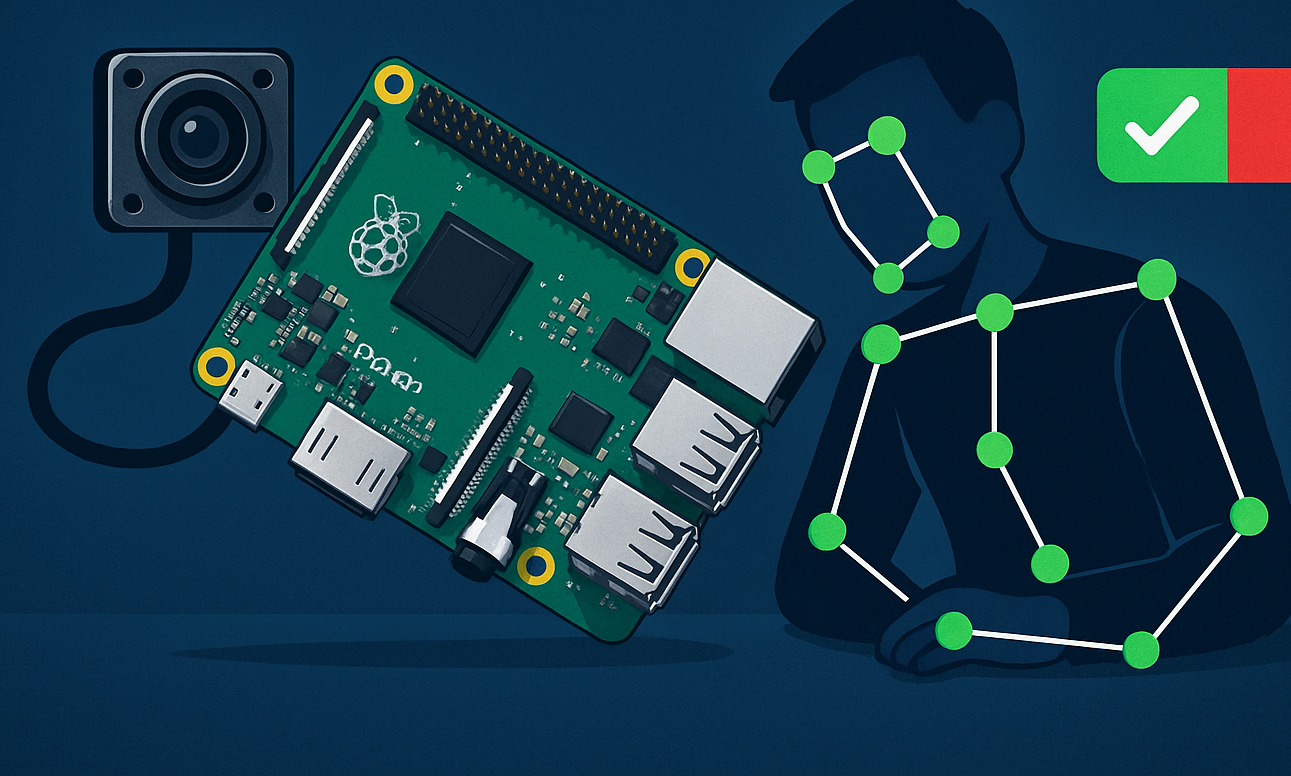

Real-Time Pose-Based Attention Analysis

Built an embedded system that captures live video of a student, runs on-device pose estimation to extract 17 body keypoints, applies attention heuristics (head tilt, drooping, hand-raise), and flags the student as “Attentive” or “Not Attentive” in real time, all on a Raspberry Pi.

Project Sections

Overview

The Real-Time Pose-Based Attention Analysis project delivers an end-to-end solution for monitoring student engagement using only a Raspberry Pi 4B+ and a USB camera. The system captures live video, runs on-device pose estimation via a lightweight TensorFlow Lite MoveNet model, applies a set of human-interpretable heuristics to assess attention, and logs all relevant data for future refinement.

Features

- Real-Time Pose Estimation: ~15 FPS on Pi

- Attention Heuristics: Head tilt, drooping, hand-raise detection

- On-Device Processing: All computations performed on Raspberry Pi

- Lightweight Model: MoveNet model optimized for embedded systems

- Visual Overlay: Annotated live video with keypoints and status

- Real-Time Inference: Pose estimation and attention analysis both run in real time

- Data Logging: Captures and stores attention data for analysis

Technologies Used

Programming Language: Python

Libraries: imutils, OpenCV, TensorFlow Lite

Machine Learning Model: MoveNet

Hardware: Raspberry Pi 4B+, USB Camera

My Role

Architected end-to-end pipeline: video capture → preprocessing → TFLite inference → feature extraction → attention analysis → data logging

Integrated threaded video capture (imutils) for smoother frame rates

Implemented CSV logging of timestamp, head-tilt, attention status, feedback, and raw keypoints for future model training

Matched the input dtype and preprocessing to what the quantized MoveNet model expects
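The CSV logging step listed above could look roughly like the following. This is a minimal sketch, not the project's actual logger: the column names, the `make_row` and `write_rows` helpers, and the choice to store raw keypoints as a JSON string in one cell are all assumptions made for illustration.

```python
import csv
import io
import json
from datetime import datetime, timezone

# Hypothetical column names, based on the fields listed in this section.
FIELDNAMES = ["timestamp", "head_tilt_deg", "status", "feedback", "keypoints"]


def make_row(head_tilt_deg, status, feedback, keypoints, now=None):
    """Build one log row; keypoints is a list of 17 [y, x, score] triples."""
    now = now or datetime.now(timezone.utc)
    return {
        "timestamp": now.isoformat(),
        "head_tilt_deg": f"{head_tilt_deg:.2f}",
        "status": status,
        "feedback": feedback,
        # Assumption: raw keypoints are serialized as JSON so they fit in one cell.
        "keypoints": json.dumps(keypoints),
    }


def write_rows(stream, rows):
    """Write a header plus all rows to any text stream (file or StringIO)."""
    writer = csv.DictWriter(stream, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    demo = io.StringIO()
    write_rows(demo, [make_row(3.2, "Attentive", "Good posture", [[0.5, 0.5, 0.9]] * 17)])
    print(demo.getvalue().splitlines()[0])  # header line
```

Keeping the raw keypoints next to the derived features means the thresholds can be re-evaluated later without re-recording video, which fits the stated goal of training a classifier on the logged data.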

Challenges and Solutions

Pi-Level Performance: Baseline throughput was ~5 FPS; moving frame capture to a separate thread resolved the bottleneck.
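The idea behind threaded capture is that a background thread keeps reading frames while the main loop runs inference, so the main loop never blocks on camera I/O. The sketch below illustrates the pattern with the standard library only. The `ThreadedReader` class is hypothetical and is not the imutils implementation; imutils offers a `VideoStream` class with a similar `start()` / `read()` / `stop()` interface.

```python
import threading
import time


class ThreadedReader:
    """Keep only the most recent frame from a blocking read function.

    read_fn is any callable returning (ok, frame), for example the bound
    read method of a cv2.VideoCapture object.
    """

    def __init__(self, read_fn):
        self._read_fn = read_fn
        self._lock = threading.Lock()
        self._frame = None
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._loop, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _loop(self):
        # Runs in the background: overwrite the stored frame as fast as
        # the source can deliver, dropping frames nobody consumed.
        while not self._stopped.is_set():
            ok, frame = self._read_fn()
            if not ok:
                break
            with self._lock:
                self._frame = frame

    def read(self):
        """Return the latest frame immediately (None until the first arrives)."""
        with self._lock:
            return self._frame

    def stop(self):
        self._stopped.set()
        self._thread.join(timeout=1.0)
```

The main loop then calls `read()` once per inference pass and always gets the freshest frame, at the cost of silently skipping frames that arrived while inference was busy.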

Model DType Mismatch: The quantized TFLite model expects raw uint8 input, so preprocessing was switched from normalized float32 to unnormalized uint8.
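One way to avoid this kind of mismatch is to read the expected dtype from the interpreter and branch on it, rather than hard-coding the conversion. The sketch below assumes NumPy is available and that the frame has already been resized to the model's input size. The `to_model_input` helper is hypothetical, the 192×192 size in the usage note is an assumption (the MoveNet variant is not stated here), and scaling float inputs to [0, 1] is an assumed convention.

```python
import numpy as np


def to_model_input(frame_rgb, input_dtype):
    """Add a batch axis and convert pixels to the dtype the model expects.

    frame_rgb:   H x W x 3 uint8 array, already resized for the model.
    input_dtype: NumPy dtype, e.g. the "dtype" entry of the first element
                 returned by a TFLite interpreter's get_input_details().
    """
    batch = np.expand_dims(frame_rgb, axis=0)
    if np.issubdtype(input_dtype, np.floating):
        # Float model: assumed convention of scaling pixels to [0, 1].
        return batch.astype(input_dtype) / 255.0
    # Quantized model: pass raw 0-255 pixel values through unchanged.
    return batch.astype(input_dtype)
```

For example, `to_model_input(frame, np.uint8)` on a 192×192 RGB frame returns a `(1, 192, 192, 3)` uint8 array with the pixel values untouched.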

Heuristic Tuning: Iteratively adjusted tilt/position thresholds on real data to minimize false positives
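To make the tuned heuristics concrete, here is a minimal sketch of how head tilt, drooping, and hand-raise rules can be computed from MoveNet output. MoveNet returns 17 keypoints in COCO order, each as normalized (y, x, score). The threshold values, the function names, and the rule that a raised hand counts as attentive are illustrative assumptions, not the project's tuned values.

```python
import math

# MoveNet keypoint indices (COCO order); each keypoint is [y, x, score].
NOSE, LEFT_EYE, RIGHT_EYE = 0, 1, 2
LEFT_SHOULDER, RIGHT_SHOULDER = 5, 6
LEFT_WRIST, RIGHT_WRIST = 9, 10

# Hypothetical thresholds; real values would come from tuning on logged data.
TILT_LIMIT_DEG = 20.0
DROOP_MARGIN = 0.05
MIN_SCORE = 0.3


def head_tilt_deg(kps):
    """Angle of the line between the eyes relative to horizontal, in degrees."""
    ly, lx, _ = kps[LEFT_EYE]
    ry, rx, _ = kps[RIGHT_EYE]
    # abs() on dx keeps the result in [-90, 90] whether or not the image is mirrored.
    return math.degrees(math.atan2(ly - ry, abs(lx - rx)))


def hand_raised(kps):
    """True if a confidently detected wrist is above its shoulder (smaller y)."""
    pairs = ((LEFT_WRIST, LEFT_SHOULDER), (RIGHT_WRIST, RIGHT_SHOULDER))
    return any(
        kps[w][2] >= MIN_SCORE and kps[w][0] < kps[s][0] for w, s in pairs
    )


def drooping(kps):
    """True if the nose has dropped close to the shoulder line."""
    shoulder_y = (kps[LEFT_SHOULDER][0] + kps[RIGHT_SHOULDER][0]) / 2
    return kps[NOSE][0] > shoulder_y - DROOP_MARGIN


def classify(kps):
    # Assumption: a raised hand signals engagement and overrides the other cues.
    if hand_raised(kps):
        return "Attentive"
    if abs(head_tilt_deg(kps)) > TILT_LIMIT_DEG or drooping(kps):
        return "Not Attentive"
    return "Attentive"
```

Because every rule is a comparison against a named constant, adjusting a threshold after reviewing logged frames is a one-line change.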

Outcomes

Prototype Delivered: Complete end-to-end demo running on Pi

Dataset Collected: More than 10,000 frames logged with features and labels for future classifier training

Performance Benchmarks: ~15 FPS real-time inference with 17 keypoints

Future Enhancements

Custom Classifier: Train an ML model on the logged data to replace hand-tuned rules

Additional Cues: Add body-lean, eye-gaze, facial-expression analysis

User Interface: Develop a web dashboard for real-time monitoring and analytics

Cloud Integration: Stream data to a server for centralized monitoring and analytics

Repository Link

Explore the code and data in the GitHub repository: Real-Time Pose-Based Attention Analysis on Raspberry Pi